Fall 2021

On-Device Machine Learning is a project-based course covering how to build, train, and deploy models that can run on low-power devices (e.g., smartphones, refrigerators, and mobile robots). The course will cover advanced topics including distillation, quantization, weight imprinting, power calculation, and more. Each week, one class session will discuss a new research paper and area in this space, and the second will be a lab working group. Students will be provided with low-power compute hardware (e.g., SBCs and inference accelerators) as well as sensors (e.g., microphones, cameras, and robotics) for their course project. The project will involve three components for building low-power multimodal models:

(1) inference,

(2) performing training/updates for interactive ML, and

(3) maximizing power efficiency.

The more that can be performed on device, the more privacy-preserving and mobile the solution is.


For each stage of the course project, the final model produced will have an mAh "budget" equivalent to one full charge of a smartphone battery (~4 Ah, which corresponds to roughly 2 hrs on a Jetson Nano, 7 hrs on a Raspberry Pi, or 26 hrs on a Raspberry Pi Zero W).
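The runtimes above follow from dividing the charge budget by a board's average current draw. The sketch below makes that arithmetic explicit. It assumes a constant average draw and ignores voltage-conversion and regulator losses (a phone battery is nominally around 3.7 V, while these boards run from 5 V), so treat its numbers as rough estimates; the helper names are hypothetical.

```python
# Sketch: turning the ~4 Ah (4000 mAh) course budget into runtime estimates.
# Assumptions (not from the syllabus): constant average current draw and no
# voltage-conversion or regulator losses.

BUDGET_MAH = 4000.0  # one full smartphone charge, ~4 Ah


def runtime_hours(draw_ma: float, budget_mah: float = BUDGET_MAH) -> float:
    """Hours of operation at a constant average draw in milliamps."""
    if draw_ma <= 0:
        raise ValueError("draw_ma must be positive")
    return budget_mah / draw_ma


def implied_draw_ma(hours: float, budget_mah: float = BUDGET_MAH) -> float:
    """Average draw (mA) that would exhaust the budget in the given hours."""
    if hours <= 0:
        raise ValueError("hours must be positive")
    return budget_mah / hours


def inferences_in_budget(draw_ma: float, latency_s: float,
                         budget_mah: float = BUDGET_MAH) -> int:
    """Whole inferences that fit in the budget if each one keeps the board
    at draw_ma for latency_s seconds (hypothetical helper)."""
    budget_ma_s = budget_mah * 3600.0         # convert mAh to mA*s
    per_inference_ma_s = draw_ma * latency_s  # charge used by one inference
    if per_inference_ma_s <= 0:
        raise ValueError("draw_ma and latency_s must be positive")
    return int(budget_ma_s // per_inference_ma_s)


if __name__ == "__main__":
    # Raspberry Pi Zero W at 150 mA (from the SBC table)
    print(f"Pi Zero W: {runtime_hours(150):.1f} h")
    # Average draws implied by the quoted runtimes
    for board, hours in [("Jetson Nano", 2), ("Raspberry Pi", 7)]:
        print(f"{board}: ~{implied_draw_ma(hours):.0f} mA average")
    # Example: a model that holds the board at 2 A for 50 ms per inference
    print(inferences_in_budget(2000, 0.05))
```

For example, a 150 mA draw gives about 26.7 hours, and the 2-hour Jetson Nano figure implies an average draw of about 2 A. Framing the budget as charge per inference makes it easy to compare models that trade latency against current draw.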

- Time & Place: 10:10am - 11:30am on Tu/Th

- Course questions and discussion: Slack

- GitHub Template: https://github.com/ybisk/11-767-template

Example Industry Motivation: "... if the coffee maker with voice recognition was in use for four years, the speech recognition cost for chewing on data back in the Mr Coffee datacenter would wipe out the entire revenue stream from that coffee maker, but that same function, if implemented on a device specifically tuned for this very precise job, could be done for under $1 and would not affect the purchase price significantly." -- Source

Instructors

Yonatan Bisk

Slack and Course Communication

All course communication, including slides and discussions, will happen via Slack.

Slack

- #general-questions: For questions about lectures, the course, or help from others on class projects

- 🔒group-N: Each group should come up with a name and create its own private channel (invite the TAs and instructor). Use the same name for your GitHub fork and pin the link in the channel. Please also invite us to the GitHub repository. Example: 🔒group-RoboFun

- #hardware/#modality: Hardware- or modality-related questions should be asked in these public channels so that anyone can help.

- Private Messages: If you have a question for the instructors only, please create a 4-person group PM on Slack. Please check #general-questions first, and post there when possible.

Assignments Timeline and Grading

The course is split evenly between paper discussion and project work.

| Papers | Weight | Project/Lab | Weight |
|---|---|---|---|
| Participation | 20% | Lab Reports (1 page) | 45% |
| Paper Presentations | 15% | Final Report & Presentation | 20% |

Participation in Class or Slack (20%)

Participation is evaluated as "actively asking/answering questions based on the lectures, readings, and/or assisting other teams with project issues". Concretely, this means that every novel question or helpful answer provided in Slack will count for 1%, up to a total of 20% of your grade.
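As a rough illustration, the grading table and the participation rule can be combined into one final-grade calculation. This is a minimal sketch, not an official grading script: it assumes the three non-participation components are each scored as a fraction in [0, 1], and the names `WEIGHTS`, `participation_points`, and `final_grade` are hypothetical.

```python
from typing import Dict

# Sketch of the grading table's weighting (hypothetical, not an official script).
# Assumption: each non-participation component is scored as a fraction in [0, 1].
WEIGHTS = {
    "paper_presentations": 15.0,
    "lab_reports": 45.0,
    "final_report_and_presentation": 20.0,
}
PARTICIPATION_CAP = 20  # 1 point per novel question or helpful answer, max 20


def participation_points(contributions: int) -> int:
    """Participation percentage points earned from class/Slack contributions."""
    return min(max(contributions, 0), PARTICIPATION_CAP)


def final_grade(contributions: int, scores: Dict[str, float]) -> float:
    """Final grade in percent (0-100)."""
    total = float(participation_points(contributions))
    for name, weight in WEIGHTS.items():
        score = scores[name]
        if not 0.0 <= score <= 1.0:
            raise ValueError(f"{name} must be in [0, 1], got {score}")
        total += weight * score
    return total


if __name__ == "__main__":
    print(final_grade(12, {
        "paper_presentations": 0.9,
        "lab_reports": 0.85,
        "final_report_and_presentation": 0.95,
    }))
```

Because participation is capped, contributions beyond the twentieth do not raise the grade further; the other components scale linearly with their weights.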

Submission Policies:

- All deadlines are 5pm EST (determined by GitHub commit time)

- Late days: Every team has a budget of 3 late days, calculated automatically from git commit times. Beyond this budget, 2% (absolute) is removed from the maximum grade.
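The late-day rule can be sketched as below. This is a hypothetical illustration, not the course's actual script: it assumes a partial day counts as a full late day, that the 2% deduction applies per late day beyond the team's budget, and that "EST" means a fixed UTC-5 offset. A commit timestamp could come from `git log -1 --format=%cI`, which prints the committer date in strict ISO 8601 and can be parsed with `datetime.fromisoformat`.

```python
import math
from datetime import datetime, timedelta, timezone

# Hypothetical late-day calculator. Assumptions: partial days round up, the
# 2% absolute deduction applies per late day beyond the budget, and "EST"
# is a fixed UTC-5 offset.
EST = timezone(timedelta(hours=-5), "EST")
FREE_LATE_DAYS = 3
PENALTY_PER_DAY = 2.0  # absolute percentage points off the maximum grade


def late_days(deadline: datetime, commit_time: datetime) -> int:
    """Whole late days for one submission (any partial day counts as one)."""
    if commit_time <= deadline:
        return 0
    return math.ceil((commit_time - deadline) / timedelta(days=1))


def max_grade(submissions, base: float = 100.0) -> float:
    """Maximum attainable grade given (deadline, commit_time) pairs for a team."""
    used = sum(late_days(deadline, commit) for deadline, commit in submissions)
    over_budget = max(used - FREE_LATE_DAYS, 0)
    return base - PENALTY_PER_DAY * over_budget


if __name__ == "__main__":
    deadline = datetime(2021, 10, 7, 17, 0, tzinfo=EST)  # 5pm EST
    commit = datetime.fromisoformat("2021-10-09T18:00:00-05:00")
    print(late_days(deadline, commit), max_grade([(deadline, commit)]))
```

Comparing timezone-aware datetimes lets commits recorded in any UTC offset be checked against the same 5pm EST deadline.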

Projects, Hardware, and Resources

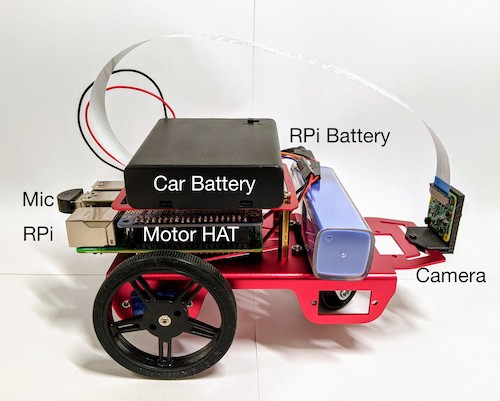

The course will be primarily centered on a few multimodal tasks/platforms to facilitate cross-team collaboration and technical assistance. If your team wants to use custom hardware or sensors not listed here, that's fine, but please reach out so we can discuss it and think through the implications. Every team will also be provided with one of the Single Board Computers (SBCs) listed below.

Example Projects

| Input | Output | Task |

|---|---|---|

| Speech | Text | Open-Domain QA |

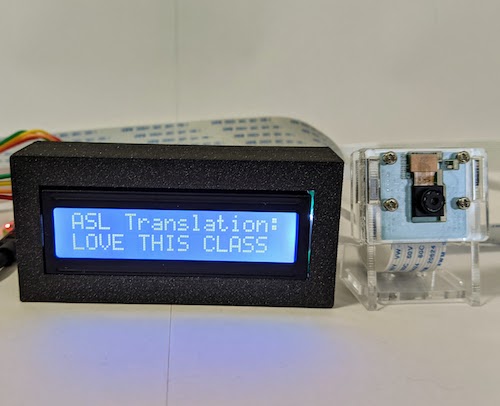

| Images | Text | Object Detection or ASL Finger Spelling |

| Images | Robot Arm | Learning from Demonstration |

| Speech + Images | Robot Car | Vision-Language Navigation |

|

|

Single Board Computers

| SBC | RAM | Notes |
|---|---|---|
| Raspberry Pi Zero W | 512MB | 150 mA draw; limited processor |
| Raspberry Pi 4 | 2GB, 4GB, or 8GB | 2 A draw; moderately powerful processor |
| Google Coral | 1GB, 4GB | Edge TPU accelerator (TFLite) |
| Jetson Nano | 2GB | 128-core NVIDIA Maxwell GPU (CUDA cores) |

Resources

- TinyML by Warden and Situnayake (2019)

- Getting Started with AI on Jetson Nano

- PyTorch Mobile

- ONNX Mobile

- Example DexArm Rotary Code

Classes

| Tuesday | Thursday |
|---|---|
| Aug 31: Course structure & Background | Sept 2: Hardware and Modality choices |
| Sept 7: Understanding the Ecosystem | Sept 9: OS and Peripherals setup |
| Sept 14: TinyML, TFLite, PyTorch Mobile | Sept 16: Benchmark existing model |
| Sept 21: Distillation | Sept 23: Fine-tune pretrained model |
| Sept 28: Distillation | Sept 30: Thinking |
| Oct 5: Quantization | Oct 7: Project Proposal |
| Oct 12: Quantization | Oct 14: No class |
| Oct 19: On-Device Computer Vision | Oct 21: Baseline or Hardcoded system |
| Oct 26: Real-Time Speech Recognition | Oct 28: Implementation |
| Nov 2: Weight Imprinting | Nov 4: Implementation |
| Nov 9: Neural Architecture Search | Nov 11: Implementation |
| Nov 16: Power implications of accelerators | Nov 18: Carbon & Alternative Power |
| Nov 23: Multimodal Fusion | Nov 25: No class |
| Nov 30: FPGAs, Batteries, Solar, ... | Dec 2: Concerns Discussion and Final Prep |
| Dec 7: Final Presentation | Dec 9: Final Report due |